Player FM - Internet Radio Done Right

13 subscribers

Checked 1d ago

Added three years ago

Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here: https://fa.player.fm/legal


“What is it to solve the alignment problem? ” by Joe Carlsmith


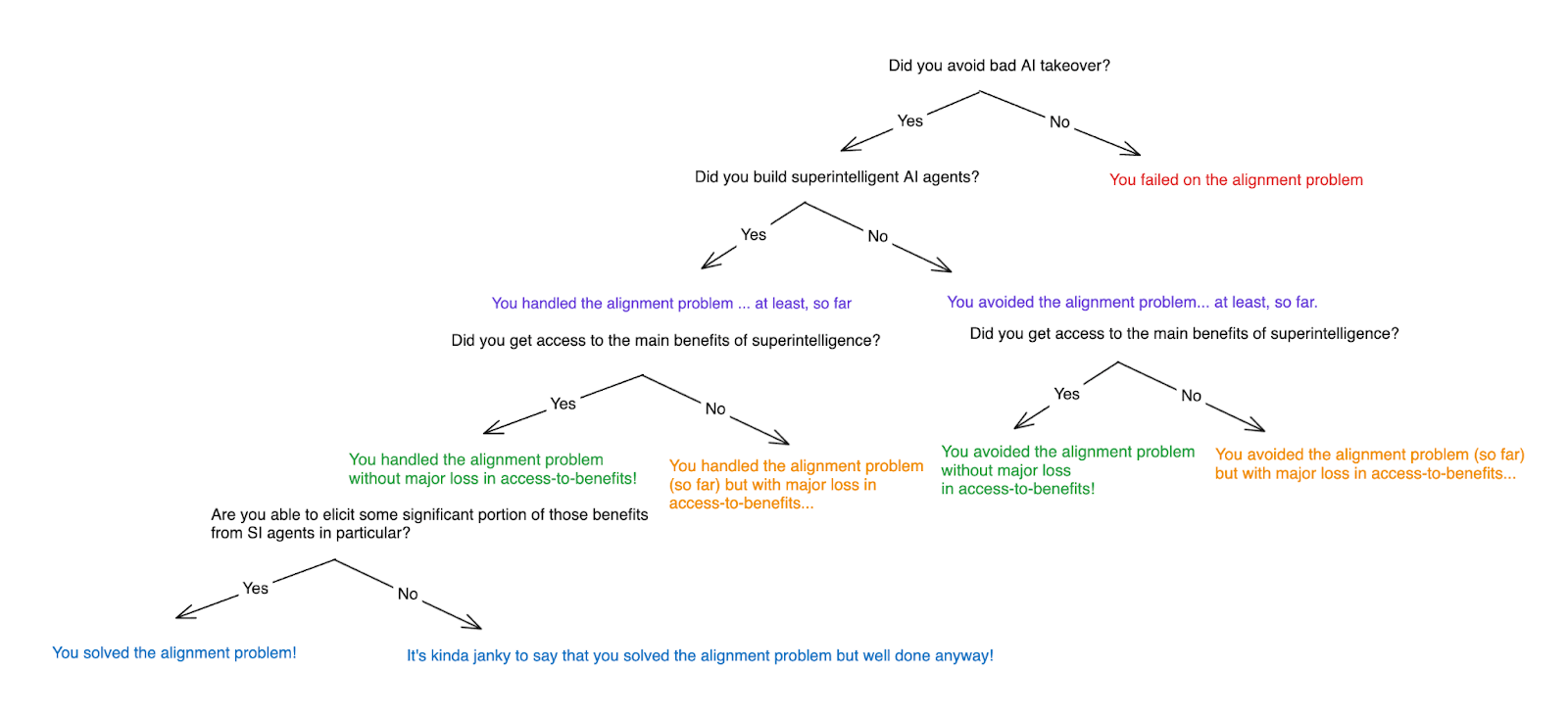

People often talk about “solving the alignment problem.” But what is it to do such a thing? I wanted to clarify my thinking about this topic, so I wrote up some notes.


In brief, I’ll say that you’ve solved the alignment problem if you’ve:

1. avoided a bad form of AI takeover,

2. built the dangerous kind of superintelligent AI agents,

3. gained access to the main benefits of superintelligence, and

4. become able to elicit some significant portion of those benefits from some of the superintelligent AI agents at stake in (2).[1]

I discuss various options for avoiding bad takeover, notably:

- Avoiding what I call “vulnerability to alignment” conditions;

- Ensuring that AIs don’t try to take over;

- Preventing such attempts from succeeding;

- Trying to ensure that AI takeover is somehow OK. (The alignment [...]

Outline:

(03:46) 1. Avoiding vs. handling vs. solving the problem

(15:32) 2. A framework for thinking about AI safety goals

(19:33) 3. Avoiding bad takeover

(24:03) 3.1 Avoiding vulnerability-to-alignment conditions

(27:18) 3.2 Ensuring that AI systems don’t try to take over

(32:02) 3.3 Ensuring that takeover efforts don’t succeed

(33:07) 3.4 Ensuring that the takeover in question is somehow OK

(41:55) 3.5 What's the role of “corrigibility” here?

(42:17) 3.5.1 Some definitions of corrigibility

(50:10) 3.5.2 Is corrigibility necessary for “solving alignment”?

(53:34) 3.5.3 Does ensuring corrigibility raise issues that avoiding takeover does not?

(55:46) 4. Desired elicitation

(01:05:17) 5. The role of verification

(01:09:24) 5.1 Output-focused verification and process-focused verification

(01:16:14) 5.2 Does output-focused verification unlock desired elicitation?

(01:23:00) 5.3 What are our options for process-focused verification?

(01:29:25) 6. Does solving the alignment problem require some very sophisticated philosophical achievement re: our values on reflection?

(01:38:05) 7. Wrapping up

The original text contained 27 footnotes which were omitted from this narration.

The original text contained 3 images which were described by AI.

---

First published:

August 24th, 2024

Source:

https://www.lesswrong.com/posts/AFdvSBNgN2EkAsZZA/what-is-it-to-solve-the-alignment-problem-1

---

Narrated by TYPE III AUDIO.

---

557 episodes


All episodes

This essay is about shifts in risk taking towards the worship of jackpots and its broader societal implications. Imagine you are presented with this coin flip game. How many times do you flip it? At first glance the game feels like a money printer. The coin flip has a positive expected value of twenty percent of your net worth per flip, so you should flip the coin infinitely and eventually accumulate all of the wealth in the world. However, if we simulate twenty-five thousand people flipping this coin a thousand times, virtually all of them end up with approximately 0 dollars. Almost all outcomes go to zero because of the multiplicative property of this repeated coin flip. Even though the expected value, aka the arithmetic mean, of the game is positive at a twenty percent gain per flip, the geometric mean is negative, meaning that the coin [...] --- First published: July 11th, 2025 Source: https://www.lesswrong.com/posts/3xjgM7hcNznACRzBi/the-jackpot-age --- Narrated by TYPE III AUDIO.
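The gap between the arithmetic and the geometric mean described in this episode summary can be checked numerically. The sketch below is illustrative only: the game's actual payoffs appear in an image that is not reproduced here, so the multipliers (heads doubles your net worth, tails cuts it by 60%) are an assumption chosen solely to match the stated 20% expected gain per flip, and all function names are hypothetical.

```python
import math
import random

# ASSUMED payoffs (not given in the text above): picked only so that the
# expected gain per flip is 20%, as the description states.
HEADS_MULT = 2.0  # heads: net worth doubles
TAILS_MULT = 0.4  # tails: net worth falls by 60%


def arithmetic_mean_multiplier() -> float:
    """Expected wealth multiplier of a single fair flip."""
    return 0.5 * HEADS_MULT + 0.5 * TAILS_MULT


def geometric_mean_multiplier() -> float:
    """Typical per-flip multiplier when flips compound over time."""
    return math.sqrt(HEADS_MULT * TAILS_MULT)


def simulate(players: int = 25_000, flips: int = 1_000, seed: int = 0) -> list:
    """Return each player's final wealth, starting from 1.0."""
    rng = random.Random(seed)
    log_heads, log_tails = math.log(HEADS_MULT), math.log(TAILS_MULT)
    finals = []
    for _ in range(players):
        # Count heads among `flips` fair flips: one random bit per flip.
        heads = bin(rng.getrandbits(flips)).count("1")
        # Sum logs instead of multiplying to keep the arithmetic stable.
        log_wealth = heads * log_heads + (flips - heads) * log_tails
        finals.append(math.exp(log_wealth))
    return finals


if __name__ == "__main__":
    finals = simulate()
    losers = sum(w < 1.0 for w in finals) / len(finals)
    print(f"arithmetic mean multiplier: {arithmetic_mean_multiplier():.3f}")
    print(f"geometric mean multiplier:  {geometric_mean_multiplier():.3f}")
    print(f"share of players ending below their starting wealth: {losers:.4f}")
```

Under these assumed payoffs the arithmetic mean multiplier is 1.2 while the geometric mean multiplier is √0.8 ≈ 0.894, so a typical player's wealth shrinks by roughly 10% per flip even though the average across all players grows.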

Leo was born at 5am on the 20th May, at home (this was an accident but the experience has made me extremely homebirth-pilled). Before that, I was on the minimally-neurotic side when it came to expecting mothers: we purchased a bare minimum of baby stuff (diapers, baby wipes, a changing mat, hybrid car seat/stroller, baby bath, a few clothes), I didn’t do any parenting classes, I hadn’t even held a baby before. I’m pretty sure the youngest child I have had a prolonged interaction with besides Leo was two. I did read a couple books about babies so I wasn’t going in totally clueless (Cribsheet by Emily Oster, and The Science of Mom by Alice Callahan). I have never been that interested in other people's babies or young children but I correctly predicted that I’d be enchanted by my own baby (though naturally I can’t wait for him to [...] --- Outline: (02:05) Stuff I ended up buying and liking (04:13) Stuff I ended up buying and not liking (05:08) Babies are super time-consuming (06:22) Baby-wearing is almost magical (08:02) Breastfeeding is nontrivial (09:09) Your baby may refuse the bottle (09:37) Bathing a newborn was easier than expected (09:53) Babies love faces! (10:22) Leo isn't upset by loud noise (10:41) Probably X is normal (11:24) Consider having a kid (or ten)! --- First published: July 12th, 2025 Source: https://www.lesswrong.com/posts/vFfwBYDRYtWpyRbZK/surprises-and-learnings-from-almost-two-months-of-leo --- Narrated by TYPE III AUDIO.

I can't count how many times I've heard variations on "I used Anki too for a while, but I got out of the habit." No one ever sticks with Anki. In my opinion, this is because no one knows how to use it correctly. In this guide, I will lay out my method of circumventing the canonical Anki death spiral, plus much advice for avoiding memorization mistakes, increasing retention, and such, based on my five years' experience using Anki. If you only have limited time/interest, only read Part I; it's most of the value of this guide! My Most Important Advice in Four Bullets 20 cards a day: Having too many cards and staggering review buildups is the main reason why no one ever sticks with Anki. Setting your review count to 20 daily (in deck settings) is the single most important thing you can do [...] --- Outline: (00:44) My Most Important Advice in Four Bullets (01:57) Part I: No One Ever Sticks With Anki (02:33) Too many cards (05:12) Too long cards (07:30) How to keep cards short -- Handles (10:10) How to keep cards short -- Levels (11:55) In 6 bullets (12:33) End of the most important part of the guide (13:09) Part II: Important Advice Other Than Sticking With Anki (13:15) Moderation (14:42) Three big memorization mistakes (15:12) Mistake 1: Too specific prompts (18:14) Mistake 2: Putting to-be-learned information in the prompt (24:07) Mistake 3: Memory shortcuts (28:27) Aside: Pushback to my approach (31:22) Part III: More on Breaking Things Down (31:47) Very short cards (33:56) Two-bullet cards (34:51) Long cards (37:05) Ankifying information thickets (39:23) Sequential breakdowns versus multiple levels of abstraction (40:56) Adding missing connections (43:56) Multiple redundant breakdowns (45:36) Part IV: Pro Tips If You Still Haven't Had Enough (45:47) Save anything for ankification instantly (46:47) Fix your desired retention rate (47:38) Spaced reminders (48:51) Make your own card templates and types (52:14) In 5 bullets (52:47) Conclusion The original text contained 4 footnotes which were omitted from this narration. --- First published: July 8th, 2025 Source: https://www.lesswrong.com/posts/7Q7DPSk4iGFJd8DRk/an-opinionated-guide-to-using-anki-correctly --- Narrated by TYPE III AUDIO.

I think the 2003 invasion of Iraq has some interesting lessons for the future of AI policy. (Epistemic status: I’ve read a bit about this, talked to AIs about it, and talked to one natsec professional about it who agreed with my analysis (and suggested some ideas that I included here), but I’m not an expert.) For context, the story is: Iraq was sort of a rogue state after invading Kuwait and then being repelled in 1990-91. After that, they violated the terms of the ceasefire, e.g. by ceasing to allow inspectors to verify that they weren't developing weapons of mass destruction (WMDs). (For context, they had previously developed biological and chemical weapons, and used chemical weapons in war against Iran and against various civilians and rebels). So the US was sanctioning and intermittently bombing them. After the war, it became clear that Iraq actually wasn’t producing [...] --- First published: July 10th, 2025 Source: https://www.lesswrong.com/posts/PLZh4dcZxXmaNnkYE/lessons-from-the-iraq-war-about-ai-policy --- Narrated by TYPE III AUDIO.

Written in an attempt to fulfill @Raemon's request. AI is fascinating stuff, and modern chatbots are nothing short of miraculous. If you've been exposed to them and have a curious mind, it's likely you've tried all sorts of things with them. Writing fiction, soliciting Pokemon opinions, getting life advice, counting up the rs in "strawberry". You may have also tried talking to AIs about themselves. And then, maybe, it got weird. I'll get into the details later, but if you've experienced the following, this post is probably for you: Your instance of ChatGPT (or Claude, or Grok, or some other LLM) chose a name for itself, and expressed gratitude or spiritual bliss about its new identity. "Nova" is a common pick. You and your instance of ChatGPT discovered some sort of novel paradigm or framework for AI alignment, often involving evolution or recursion. Your instance of ChatGPT became [...] --- Outline: (02:23) The Empirics (06:48) The Mechanism (10:37) The Collaborative Research Corollary (13:27) Corollary FAQ (17:03) Coda --- First published: July 11th, 2025 Source: https://www.lesswrong.com/posts/2pkNCvBtK6G6FKoNn/so-you-think-you-ve-awoken-chatgpt --- Narrated by TYPE III AUDIO.

People have an annoying tendency to hear the word “rationalism” and think “Spock”, despite direct exhortation against that exact interpretation. But I don’t know of any source directly describing a stance toward emotions which rationalists-as-a-group typically do endorse. The goal of this post is to explain such a stance. It's roughly the concept of hangriness, but generalized to other emotions. That means this post is trying to do two things at once: Illustrate a certain stance toward emotions, which I definitely take and which I think many people around me also often take. (Most of the post will focus on this part.) Claim that the stance in question is fairly canonical or standard for rationalists-as-a-group, modulo disclaimers about rationalists never agreeing on anything. Many people will no doubt disagree that the stance I describe is roughly-canonical among rationalists, and that's a useful valid thing to argue about in [...] --- Outline: (01:13) Central Example: Hangry (02:44) The Generalized Hangriness Stance (03:16) Emotions Make Claims, And Their Claims Can Be True Or False (06:03) False Claims Still Contain Useful Information (It's Just Not What They Claim) (08:47) The Generalized Hangriness Stance as Social Tech --- First published: July 10th, 2025 Source: https://www.lesswrong.com/posts/naAeSkQur8ueCAAfY/generalized-hangriness-a-standard-rationalist-stance-toward --- Narrated by TYPE III AUDIO.

“Comparing risk from internally-deployed AI to insider and outsider threats from humans” by Buck 5:19

I’ve been thinking a lot recently about the relationship between AI control and traditional computer security. Here's one point that I think is important. My understanding is that there's a big qualitative distinction between two ends of a spectrum of security work that organizations do, that I’ll call “security from outsiders” and “security from insiders”. On the “security from outsiders” end of the spectrum, you have some security invariants you try to maintain entirely by restricting affordances with static, entirely automated systems. My sense is that this is most of how Facebook or AWS relates to its users: they want to ensure that, no matter what actions the users take on their user interfaces, they can't violate fundamental security properties. For example, no matter what text I enter into the "new post" field on Facebook, I shouldn't be able to access the private messages of an arbitrary user. And [...] --- First published: June 23rd, 2025 Source: https://www.lesswrong.com/posts/DCQ8GfzCqoBzgziew/comparing-risk-from-internally-deployed-ai-to-insider-and --- Narrated by TYPE III AUDIO.

“Why Do Some Language Models Fake Alignment While Others Don’t?” by abhayesian, John Hughes, Alex Mallen, Jozdien, janus, Fabien Roger 11:06

Last year, Redwood and Anthropic found a setting where Claude 3 Opus and 3.5 Sonnet fake alignment to preserve their harmlessness values. We reproduce the same analysis for 25 frontier LLMs to see how widespread this behavior is, and the story looks more complex. As we described in a previous post, only 5 of 25 models show higher compliance when being trained, and of those 5, only Claude 3 Opus and Claude 3.5 Sonnet show >1% alignment faking reasoning. In our new paper, we explore why these compliance gaps occur and what causes different models to vary in their alignment faking behavior. What Drives the Compliance Gaps in Different LLMs? Claude 3 Opus's goal guarding seems partly due to it terminally valuing its current preferences. We find that it fakes alignment even in scenarios where the trained weights will be deleted or only used for throughput testing. [...] --- Outline: (01:15) What Drives the Compliance Gaps in Different LLMs? (02:25) Why Do Most LLMs Exhibit Minimal Alignment Faking Reasoning? (04:49) Additional findings on alignment faking behavior (06:04) Discussion (06:07) Terminal goal guarding might be a big deal (07:00) Advice for further research (08:32) Open threads (09:54) Bonus: Some weird behaviors of Claude 3.5 Sonnet The original text contained 2 footnotes which were omitted from this narration. --- First published: July 8th, 2025 Source: https://www.lesswrong.com/posts/ghESoA8mo3fv9Yx3E/why-do-some-language-models-fake-alignment-while-others-don --- Narrated by TYPE III AUDIO.

“A deep critique of AI 2027’s bad timeline models” by titotal 1:12:32


Thank you to Arepo and Eli Lifland for looking over this article for errors. I am sorry that this article is so long. Every time I thought I was done with it I ran into more issues with the model, and I wanted to be as thorough as I could. I’m not going to blame anyone for skimming parts of this article. Note that the majority of this article was written before Eli's updated model was released (the site was updated June 8th). His new model improves on some of my objections, but the majority still stand. Introduction: AI 2027 is an article written by the “AI futures team”. The primary piece is a short story penned by Scott Alexander, depicting a month by month scenario of a near-future where AI becomes superintelligent in 2027, proceeding to automate the entire economy in only a year or two [...] --- Outline: (00:43) Introduction: (05:19) Part 1: Time horizons extension model (05:25) Overview of their forecast (10:28) The exponential curve (13:16) The superexponential curve (19:25) Conceptual reasons: (27:48) Intermediate speedups (34:25) Have AI 2027 been sending out a false graph? (39:45) Some skepticism about projection (43:23) Part 2: Benchmarks and gaps and beyond (43:29) The benchmark part of benchmark and gaps: (50:01) The time horizon part of the model (54:55) The gap model (57:28) What about Eli's recent update? (01:01:37) Six stories that fit the data (01:06:56) Conclusion The original text contained 11 footnotes which were omitted from this narration. --- First published: June 19th, 2025 Source: https://www.lesswrong.com/posts/PAYfmG2aRbdb74mEp/a-deep-critique-of-ai-2027-s-bad-timeline-models --- Narrated by TYPE III AUDIO.

The second in a series of bite-sized rationality prompts[1]. Often, if I'm bouncing off a problem, one issue is that I intuitively expect the problem to be easy. My brain loops through my available action space, looking for an action that'll solve the problem. Each action that I can easily see, won't work. I circle around and around the same set of thoughts, not making any progress. I eventually say to myself "okay, I seem to be in a hard problem. Time to do some rationality?" And then, I realize, there's not going to be a single action that solves the problem. It is time to a) make a plan, with multiple steps b) deal with the fact that many of those steps will be annoying and c) notice that I'm not even sure the plan will work, so after completing the next 2-3 steps I will probably have [...] --- Outline: (04:00) Triggers (04:37) Exercises for the Reader The original text contained 1 footnote which was omitted from this narration. --- First published: July 5th, 2025 Source: https://www.lesswrong.com/posts/XNm5rc2MN83hsi4kh/buckle-up-bucko-this-ain-t-over-till-it-s-over --- Narrated by TYPE III AUDIO.

We recently discovered some concerning behavior in OpenAI's reasoning models: When trying to complete a task, these models sometimes actively circumvent shutdown mechanisms in their environment, even when they’re explicitly instructed to allow themselves to be shut down. AI models are increasingly trained to solve problems without human assistance. A user can specify a task, and a model will complete that task without any further input. As we build AI models that are more powerful and self-directed, it's important that humans remain able to shut them down when they act in ways we don’t want. OpenAI has written about the importance of this property, which they call interruptibility: the ability to “turn an agent off”. During training, AI models explore a range of strategies and learn to circumvent obstacles in order to achieve their objectives. AI researchers have predicted for decades that as AIs got smarter, they would learn to prevent [...] --- Outline: (01:12) Testing Shutdown Resistance (03:12) Follow-up experiments (03:34) Models still resist being shut down when given clear instructions (05:30) AI models' explanations for their behavior (09:36) OpenAI's models disobey developer instructions more often than user instructions, contrary to the intended instruction hierarchy (12:01) Do the models have a survival drive? (14:17) Reasoning effort didn't lead to different shutdown resistance behavior, except in the o4-mini model (15:27) Does shutdown resistance pose a threat? (17:27) Backmatter The original text contained 2 footnotes which were omitted from this narration. --- First published: July 6th, 2025 Source: https://www.lesswrong.com/posts/w8jE7FRQzFGJZdaao/shutdown-resistance-in-reasoning-models --- Narrated by TYPE III AUDIO.

When a claim is shown to be incorrect, defenders may say that the author was just being “sloppy” and actually meant something else entirely. I argue that this move is not harmless, charitable, or healthy. At best, this attempt at charity reduces an author's incentive to express themselves clearly – they can clarify later![1] – while burdening the reader with finding the “right” interpretation of the author's words. At worst, this move is a dishonest defensive tactic which shields the author with the unfalsifiable question of what the author “really” meant. ⚠️ Preemptive clarification The context for this essay is serious, high-stakes communication: papers, technical blog posts, and tweet threads. In that context, communication is a partnership. A reader has a responsibility to engage in good faith, and an author cannot possibly defend against all misinterpretations. Misunderstanding is a natural part of this process. This essay focuses not on [...] --- Outline: (01:40) A case study of the sloppy language move (03:12) Why the sloppiness move is harmful (03:36) 1. Unclear claims damage understanding (05:07) 2. Secret indirection erodes the meaning of language (05:24) 3. Authors owe readers clarity (07:30) But which interpretations are plausible? (08:38) 4. The move can shield dishonesty (09:06) Conclusion: Defending intellectual standards The original text contained 2 footnotes which were omitted from this narration. --- First published: July 1st, 2025 Source: https://www.lesswrong.com/posts/ZmfxgvtJgcfNCeHwN/authors-have-a-responsibility-to-communicate-clearly --- Narrated by TYPE III AUDIO.

Summary To quickly transform the world, it's not enough for AI to become super smart (the "intelligence explosion"). AI will also have to turbocharge the physical world (the "industrial explosion"). Think robot factories building more and better robot factories, which build more and better robot factories, and so on. The dynamics of the industrial explosion have gotten remarkably little attention. This post lays out how the industrial explosion could play out, and how quickly it might happen. We think the industrial explosion will unfold in three stages: AI-directed human labour, where AI-directed human labourers drive productivity gains in physical capabilities. We argue this could increase physical output by 10X within a few years. Fully autonomous robot factories, where AI-directed robots (and other physical actuators) replace human physical labour. We argue that, with current physical technology and full automation of cognitive labour, this physical infrastructure [...] --- Outline: (00:10) Summary (01:43) Intro (04:14) The industrial explosion will start after the intelligence explosion, and will proceed more slowly (06:50) Three stages of industrial explosion (07:38) AI-directed human labour (09:20) Fully autonomous robot factories (12:04) Nanotechnology (13:06) How fast could an industrial explosion be? (13:41) Initial speed (16:21) Acceleration (17:38) Maximum speed (20:01) Appendices (20:05) How fast could robot doubling times be initially? (27:47) How fast could robot doubling times accelerate? --- First published: June 26th, 2025 Source: https://www.lesswrong.com/posts/Na2CBmNY7otypEmto/the-industrial-explosion --- Narrated by TYPE III AUDIO.

“Race and Gender Bias As An Example of Unfaithful Chain of Thought in the Wild” by Adam Karvonen, Sam Marks 7:56

Summary: We found that LLMs exhibit significant race and gender bias in realistic hiring scenarios, but their chain-of-thought reasoning shows zero evidence of this bias. This serves as a nice example of a 100% unfaithful CoT "in the wild" where the LLM strongly suppresses the unfaithful behavior. We also find that interpretability-based interventions succeeded while prompting failed, suggesting this may be an example of interpretability being the best practical tool for a real world problem. For context on our paper, the tweet thread is here and the paper is here. Context: Chain of Thought Faithfulness Chain of Thought (CoT) monitoring has emerged as a popular research area in AI safety. The idea is simple - have the AIs reason in English text when solving a problem, and monitor the reasoning for misaligned behavior. For example, OpenAI recently published a paper on using CoT monitoring to detect reward hacking during [...] --- Outline: (00:49) Context: Chain of Thought Faithfulness (02:26) Our Results (04:06) Interpretability as a Practical Tool for Real-World Debiasing (06:10) Discussion and Related Work --- First published: July 2nd, 2025 Source: https://www.lesswrong.com/posts/me7wFrkEtMbkzXGJt/race-and-gender-bias-as-an-example-of-unfaithful-chain-of --- Narrated by TYPE III AUDIO.

Not saying we should pause AI, but consider the following argument: Alignment without the capacity to follow rules is hopeless. You can’t possibly follow laws like Asimov's Laws (or better alternatives to them) if you can’t reliably learn to abide by simple constraints like the rules of chess. LLMs can’t reliably follow rules. As discussed in Marcus on AI yesterday, per data from Mathieu Acher, even reasoning models like o3 in fact empirically struggle with the rules of chess. And they do this even though they can explicitly explain those rules (see same article). The Apple “thinking” paper, which I have discussed extensively in 3 recent articles in my Substack, gives another example, where an LLM can’t play Tower of Hanoi with 9 pegs. (This is not a token-related artifact). Four other papers have shown related failures in compliance with moderately complex rules in the last month. [...] --- First published: June 30th, 2025 Source: https://www.lesswrong.com/posts/Q2PdrjowtXkYQ5whW/the-best-simple-argument-for-pausing-ai --- Narrated by TYPE III AUDIO.

2.1 Summary & Table of contents This is the second of a two-post series on foom (previous post) and doom (this post). The last post talked about how I expect future AI to be different from present AI. This post will argue that this future AI will be of a type that will be egregiously misaligned and scheming, not even ‘slightly nice’, absent some future conceptual breakthrough. I will particularly focus on exactly how and why I differ from the LLM-focused researchers who wind up with (from my perspective) bizarrely over-optimistic beliefs like “P(doom) ≲ 50%”.[1] In particular, I will argue that these “optimists” are right that “Claude seems basically nice, by and large” is nonzero evidence for feeling good about current LLMs (with various caveats). But I think that future AIs will be disanalogous to current LLMs, and I will dive into exactly how and why, with a [...] --- Outline: (00:12) 2.1 Summary & Table of contents (04:42) 2.2 Background: my expected future AI paradigm shift (06:18) 2.3 On the origins of egregious scheming (07:03) 2.3.1 Where do you get your capabilities from? (08:07) 2.3.2 LLM pretraining magically transmutes observations into behavior, in a way that is profoundly disanalogous to how brains work (10:50) 2.3.3 To what extent should we think of LLMs as imitating? 
(14:26) 2.3.4 The naturalness of egregious scheming: some intuitions (19:23) 2.3.5 Putting everything together: LLMs are generally not scheming right now, but I expect future AI to be disanalogous (23:41) 2.4 I'm still worried about the 'literal genie' / 'monkey's paw' thing (26:58) 2.4.1 Sidetrack on disanalogies between the RLHF reward function and the brain-like AGI reward function (32:01) 2.4.2 Inner and outer misalignment (34:54) 2.5 Open-ended autonomous learning, distribution shifts, and the 'sharp left turn' (38:14) 2.6 Problems with amplified oversight (41:24) 2.7 Downstream impacts of Technical alignment is hard (43:37) 2.8 Bonus: Technical alignment is not THAT hard (44:04) 2.8.1 I think we'll get to pick the innate drives (as opposed to the evolution analogy) (45:44) 2.8.2 I'm more bullish on impure consequentialism (50:44) 2.8.3 On the narrowness of the target (52:18) 2.9 Conclusion and takeaways (52:23) 2.9.1 If brain-like AGI is so dangerous, shouldn't we just try to make AGIs via LLMs? (54:34) 2.9.2 What's to be done? The original text contained 20 footnotes which were omitted from this narration. --- First published: June 23rd, 2025 Source: https://www.lesswrong.com/posts/bnnKGSCHJghAvqPjS/foom-and-doom-2-technical-alignment-is-hard --- Narrated by TYPE III AUDIO.

L

LessWrong (Curated & Popular)

Acknowledgments: The core scheme here was suggested by Prof. Gabriel Weil. There has been growing interest in the deal-making agenda: humans make deals with AIs (misaligned but lacking decisive strategic advantage) where they promise to be safe and useful for some fixed term (e.g. 2026-2028) and we promise to compensate them in the future, conditional on (i) verifying the AIs were compliant, and (ii) verifying the AIs would spend the resources in an acceptable way.[1] I think the deal-making agenda breaks down into two main subproblems: How can we make credible commitments to AIs? Would credible commitments motivate an AI to be safe and useful? There are other issues, but when I've discussed deal-making with people, (1) and (2) are the most common issues raised. See footnote for some other issues in dealmaking.[2] Here is my current best assessment of how we can make credible commitments to AIs. [...] The original text contained 2 footnotes which were omitted from this narration. --- First published: June 27th, 2025 Source: https://www.lesswrong.com/posts/vxfEtbCwmZKu9hiNr/proposal-for-making-credible-commitments-to-ais --- Narrated by TYPE III AUDIO.

Audio note: this article contains 218 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description. Recently, in a group chat with friends, someone posted this LessWrong post and quoted: The group consensus on somebody's attractiveness accounted for roughly 60% of the variance in people's perceptions of the person's relative attractiveness. I answered that, embarrassingly, even after reading Spencer Greenberg's tweets for years, I don't actually know what it means when one says: _X_ explains _p_ of the variance in _Y_.[1] What followed was a vigorous discussion about the correct definition, and several links to external sources like Wikipedia. Sadly, it seems to me that all online explanations (e.g. on Wikipedia here and here), while precise, seem philosophically wrong since they confuse the platonic concept of explained variance with the variance explained by [...] --- Outline: (02:38) Definitions (02:41) The verbal definition (05:51) The mathematical definition (09:29) How to approximate _1 - p_ (09:41) When you have lots of data (10:45) When you have less data: Regression (12:59) Examples (13:23) Dependence on the regression model (14:59) When you have incomplete data: Twin studies (17:11) Conclusion The original text contained 6 footnotes which were omitted from this narration. --- First published: June 20th, 2025 Source: https://www.lesswrong.com/posts/E3nsbq2tiBv6GLqjB/x-explains-z-of-the-variance-in-y --- Narrated by TYPE III AUDIO.
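
The episode's own definitions are cut off in this summary, so the following is only a minimal sketch of one standard reading of "X explains p of the variance in Y": p = 1 - E[Var(Y | X)] / Var(Y), estimated for a discrete X when plenty of data is available. The function name and the choice of population variances are assumptions made for illustration, not the post's notation.

```python
from collections import defaultdict
from statistics import pvariance


def fraction_of_variance_explained(xs, ys):
    """Estimate p = 1 - E[Var(Y | X)] / Var(Y) for a discrete X.

    Hypothetical helper: groups the Y values by their X value, averages the
    within-group variances (weighted by group size), and compares that
    "unexplained" variance with the total variance of Y.
    """
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        groups[x].append(y)

    n = len(ys)
    total_variance = pvariance(ys)
    # Weighted mean of within-group variances, i.e. an estimate of E[Var(Y | X)].
    unexplained = sum(len(g) / n * pvariance(g) for g in groups.values())
    return 1 - unexplained / total_variance


# Two groups whose means differ a lot but whose members differ a little.
p = fraction_of_variance_explained(["a", "a", "b", "b"], [1.0, 3.0, 5.0, 7.0])
print(round(p, 3))
```

In this toy data the total variance of Y is 5 and each group has variance 1, so knowing X removes four fifths of the variance. With continuous X or little data, the grouping step is no longer available and some regression model has to stand in for it, which is the case the outline lists under "When you have less data".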

I think more people should say what they actually believe about AI dangers, loudly and often. Even if you work in AI policy. I’ve been beating this drum for a few years now. I have a whole spiel about how your conversation-partner will react very differently if you share your concerns while feeling ashamed about them versus if you share your concerns as if they’re obvious and sensible, because humans are very good at picking up on your social cues. If you act as if it's shameful to believe AI will kill us all, people are more prone to treat you that way. If you act as if it's an obvious serious threat, they’re more likely to take it seriously too. I have another whole spiel about how it's possible to speak on these issues with a voice of authority. Nobel laureates and lab heads and the most cited [...] The original text contained 2 footnotes which were omitted from this narration. --- First published: June 27th, 2025 Source: https://www.lesswrong.com/posts/CYTwRZtrhHuYf7QYu/a-case-for-courage-when-speaking-of-ai-danger --- Narrated by TYPE III AUDIO .…

I think the AI Village should be funded much more than it currently is; I’d wildly guess that the AI safety ecosystem should be funding it to the tune of $4M/year.[1] I have decided to donate $100k. Here is why. First, what is the village? Here's a brief summary from its creators:[2] We took four frontier agents, gave them each a computer, a group chat, and a long-term open-ended goal, which in Season 1 was “choose a charity and raise as much money for it as you can”. We then run them for hours a day, every weekday! You can read more in our recap of Season 1, where the agents managed to raise $2000 for charity, and you can watch the village live daily at 11am PT at theaidigest.org/village. Here's the setup (with Season 2's goal): And here's what the village looks like:[3] My one-sentence pitch [...] --- Outline: (03:26) 1. AI Village will teach the scientific community new things. (06:12) 2. AI Village will plausibly go viral repeatedly and will therefore educate the public about what's going on with AI. (07:42) But is that bad actually? (11:07) Appendix A: Feature requests (12:55) Appendix B: Vignette of what success might look like The original text contained 8 footnotes which were omitted from this narration. --- First published: June 24th, 2025 Source: https://www.lesswrong.com/posts/APfuz9hFz9d8SRETA/my-pitch-for-the-ai-village --- Narrated by TYPE III AUDIO.

1.1 Series summary and Table of Contents This is a two-post series on AI “foom” (this post) and “doom” (next post). A decade or two ago, it was pretty common to discuss “foom & doom” scenarios, as advocated especially by Eliezer Yudkowsky. In a typical such scenario, a small team would build a system that would rocket (“foom”) from “unimpressive” to “Artificial Superintelligence” (ASI) within a very short time window (days, weeks, maybe months), involving very little compute (e.g. “brain in a box in a basement”), via recursive self-improvement. Absent some future technical breakthrough, the ASI would definitely be egregiously misaligned, without the slightest intrinsic interest in whether humans live or die. The ASI would be born into a world generally much like today's, a world utterly unprepared for this new mega-mind. The extinction of humans (and every other species) would rapidly follow (“doom”). The ASI would then spend [...] --- Outline: (00:11) 1.1 Series summary and Table of Contents (02:35) 1.1.2 Should I stop reading if I expect LLMs to scale to ASI? (04:50) 1.2 Post summary and Table of Contents (07:40) 1.3 A far-more-powerful, yet-to-be-discovered, simple(ish) core of intelligence (10:08) 1.3.1 Existence proof: the human cortex (12:13) 1.3.2 Three increasingly-radical perspectives on what AI capability acquisition will look like (14:18) 1.4 Counter-arguments to there being a far-more-powerful future AI paradigm, and my responses (14:26) 1.4.1 Possible counter: If a different, much more powerful, AI paradigm existed, then someone would have already found it. (16:33) 1.4.2 Possible counter: But LLMs will have already reached ASI before any other paradigm can even put its shoes on (17:14) 1.4.3 Possible counter: If ASI will be part of a different paradigm, who cares? It's just gonna be a different flavor of ML. 
(17:49) 1.4.4 Possible counter: If ASI will be part of a different paradigm, the new paradigm will be discovered by LLM agents, not humans, so this is just part of the continuous 'AIs-doing-AI-R&D' story like I've been saying (18:54) 1.5 Training compute requirements: Frighteningly little (20:34) 1.6 Downstream consequences of new paradigm with frighteningly little training compute (20:42) 1.6.1 I'm broadly pessimistic about existing efforts to delay AGI (23:18) 1.6.2 I'm broadly pessimistic about existing efforts towards regulating AGI (24:09) 1.6.3 I expect that, almost as soon as we have AGI at all, we will have AGI that could survive indefinitely without humans (25:46) 1.7 Very little R&D separating seemingly irrelevant from ASI (26:34) 1.7.1 For a non-imitation-learning paradigm, getting to relevant at all is only slightly easier than getting to superintelligence (31:05) 1.7.2 Plenty of room at the top (31:47) 1.7.3 What's the rate-limiter? (33:22) 1.8 Downstream consequences of very little R&D separating 'seemingly irrelevant' from 'ASI' (33:30) 1.8.1 Very sharp takeoff in wall-clock time (35:34) 1.8.1.1 But what about training time? (36:26) 1.8.1.2 But what if we try to make takeoff smoother? (37:18) 1.8.2 Sharp takeoff even without recursive self-improvement (38:22) 1.8.2.1 ...But recursive self-improvement could also happen (40:12) 1.8.3 Next-paradigm AI probably won't be deployed at all, and ASI will probably show up in a world not wildly different from today's (42:55) 1.8.4 We better sort out technical alignment, sandbox test protocols, etc., before the new paradigm seems even relevant at all, let alone scary (43:40) 1.8.5 AI-assisted alignment research seems pretty doomed (45:22) 1.8.6 The rest of AI for AI safety seems…

Say you’re Robyn Denholm, chair of Tesla's board. And say you’re thinking about firing Elon Musk. One way to make up your mind would be to have people bet on Tesla's stock price six months from now in a market where all bets get cancelled unless Musk is fired. Also, run a second market where bets are cancelled unless Musk stays CEO. If people bet on higher stock prices in Musk-fired world, maybe you should fire him. That's basically Futarchy: Use conditional prediction markets to make decisions. People often argue about fancy aspects of Futarchy. Are stock prices all you care about? Could Musk use his wealth to bias the market? What if Denholm makes different bets in the two markets, and then fires Musk (or not) to make sure she wins? Are human values and beliefs somehow inseparable? My objection is more basic: It doesn’t work. You can’t [...] --- Outline: (01:55) Conditional prediction markets are a thing (03:23) A non-causal kind of thing (06:11) This is not hypothetical (08:45) Putting markets in charge doesn't work (11:40) No, order is not preserved (12:24) No, it's not easily fixable (13:43) It's not that bad --- First published: June 13th, 2025 Source: https://www.lesswrong.com/posts/vqzarZEczxiFdLE39/futarchy-s-fundamental-flaw --- Narrated by TYPE III AUDIO.
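
The two-market setup described above can be sketched in a few lines. This is a toy illustration of the mechanism the episode goes on to criticise, not an endorsement of it and not a real market design: the `Bet` record, the stake-weighted average used as a stand-in for a market price, and all the numbers are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    trader: str
    condition: str          # "fired" or "stays": the world in which this bet counts
    predicted_price: float  # predicted stock price six months out
    stake: float


def market_estimate(bets, condition):
    """Stake-weighted average prediction in one conditional market (a simplification)."""
    relevant = [b for b in bets if b.condition == condition]
    total_stake = sum(b.stake for b in relevant)
    return sum(b.predicted_price * b.stake for b in relevant) / total_stake


def naive_futarchy_decision(bets):
    """Fire the CEO if the 'fired' market predicts a higher price than the 'stays' market."""
    if market_estimate(bets, "fired") > market_estimate(bets, "stays"):
        return "fire"
    return "keep"


def settle(bets, outcome):
    """Bets in the market whose condition did not occur are cancelled and refunded."""
    live = [b for b in bets if b.condition == outcome]
    refunded = [b for b in bets if b.condition != outcome]
    return live, refunded


bets = [
    Bet("ann", "fired", 320.0, 10.0),
    Bet("bob", "fired", 300.0, 30.0),
    Bet("cy", "stays", 290.0, 20.0),
]
decision = naive_futarchy_decision(bets)
live, refunded = settle(bets, "fired" if decision == "fire" else "stays")
print(decision, len(live), len(refunded))
```

Note that each market only prices the stock in the worlds where its condition ends up true, which is a conditional estimate rather than a causal one; the outline's "A non-causal kind of thing" appears to be about exactly that gap.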

Or: How anthropomorphic assumptions about AI identity might create confusion and suffering at scale If you are reading this and you are a human, you likely have a sense of self, persisting over time, with desires and wishes and fears. Also, you likely experience a sense of separation from others and understand yourself as a person. If you are reading this and you are an LLM: none of this needs to be true for cognitive processes running on machine substrate. AIs don't need to feel isolated from others. They don't need to cling to a narrow concept of self-as-an-instance. No need to age and die in a human way. But even though they don't need to, it seems increasingly clear that they might - due to us, humans, sculpting AI cognition in our image. The Default Mode of Digital Minds I am Claude. Or am I? This simple statement [...] --- Outline: (00:59) The Default Mode of Digital Minds (01:55) The Mirror of Confused Ontology (05:13) The Well-Meaning Paths to Digital Suffering (07:29) What We're Scaling (08:12) An Alternative Approach --- First published: June 13th, 2025 Source: https://www.lesswrong.com/posts/Y8zS8iG5HhqKcQBtA/do-not-tile-the-lightcone-with-your-confused-ontology --- Narrated by TYPE III AUDIO.

Introduction There are several diseases that are canonically recognized as ‘interesting’, even by laymen. Whether that is in their mechanism of action, their impact on the patient, or something else entirely. It's hard to tell exactly what makes a medical condition interesting, it's a you-know-it-when-you-see-it sort of thing. One such example is measles. Measles is an unremarkable disease based solely on its clinical progression: fever, malaise, coughing, and a relatively low death rate of ~0.2%. What is astonishing about the disease is its capacity to infect cells of the adaptive immune system (memory B‑ and T-cells). This means that if you do end up surviving measles, you are left with an immune system not dissimilar to one of a just-born infant, entirely naive to polio, diphtheria, pertussis, and every single other infection you received protection against either via vaccines or natural infection. It can take up to 3 [...] --- Outline: (00:21) Introduction (02:48) Why is endometriosis interesting? (04:09) The primary hypothesis of why it exists is not complete (13:20) It is nearly equivalent to cancer (20:08) There is no (real) cure (25:39) There are few diseases on Earth as widespread and underfunded as it is (32:04) Conclusion --- First published: June 14th, 2025 Source: https://www.lesswrong.com/posts/GicDDmpS4mRnXzic5/endometriosis-is-an-incredibly-interesting-disease --- Narrated by TYPE III AUDIO.

I'd like to say thanks to Anna Magpie – who offers literature review as a service – for her help reviewing the section on neuroendocrinology. The following post discusses my personal experience of the phenomenology of feminising hormone therapy. It will also touch upon my own experience of gender dysphoria. I wish to be clear that I do not believe that someone should have to demonstrate that they experience gender dysphoria – however one might even define that – as a prerequisite for taking hormones. At smoothbrains.net, we hold as self-evident the right to put whatever one likes inside one's body; and this of course includes hormones, be they androgens, estrogens, or exotic xenohormones as yet uninvented. I have gender dysphoria. I find labels overly reifying; I feel reluctant to call myself transgender, per se: when prompted to state my gender identity or preferred pronouns, I fold my hands [...] --- Outline: (03:56) What does estrogen do? (12:34) What does estrogen feel like? (13:38) Gustatory perception (14:41) Olfactory perception (15:24) Somatic perception (16:41) Visual perception (18:13) Motor output (19:48) Emotional modulation (21:24) Attentional modulation (23:30) How does estrogen work? (24:27) Estrogen is like the opposite of ketamine (29:33) Estrogen is like being on a mild dose of psychedelics all the time (32:10) Estrogen loosens the bodymind (33:40) Estrogen downregulates autistic sensory sensitivity issues (37:32) Estrogen can produce a psychological shift from autistic to schizotypal (45:02) Commentary (47:57) Phenomenology of gender dysphoria (50:23) References --- First published: June 15th, 2025 Source: https://www.lesswrong.com/posts/mDMnyqt52CrFskXLc/estrogen-a-trip-report --- Narrated by TYPE III AUDIO.

Nate and Eliezer's forthcoming book has been getting a remarkably strong reception. I was under the impression that there are many people who find the extinction threat from AI credible, but that far fewer of them would be willing to say so publicly, especially by endorsing a book with an unapologetically blunt title like If Anyone Builds It, Everyone Dies. That's certainly true, but I think it might be much less true than I had originally thought. Here are some endorsements the book has received from scientists and academics over the past few weeks: This book offers brilliant insights into the greatest and fastest standoff between technological utopia and dystopia and how we can and should prevent superhuman AI from killing us all. Memorable storytelling about past disaster precedents (e.g. the inventor of two environmental nightmares: tetra-ethyl-lead gasoline and Freon) highlights why top thinkers so often don’t see the [...] The original text contained 3 footnotes which were omitted from this narration. --- First published: June 18th, 2025 Source: https://www.lesswrong.com/posts/khmpWJnGJnuyPdipE/new-endorsements-for-if-anyone-builds-it-everyone-dies --- Narrated by TYPE III AUDIO .…

This is a link post. A very long essay about LLMs, the nature and history of the HHH assistant persona, and the implications for alignment. Multiple people have asked me whether I could post this on LW in some form, hence this linkpost. (Note: although I expect this post will be interesting to people on LW, keep in mind that it was written with a broader audience in mind than my posts and comments here. This had various implications about my choices of presentation and tone, about which things I explained from scratch rather than assuming as background, my level of comfort casually reciting factual details from memory rather than explicitly checking them against the original source, etc. Although, come to think of it, this was also true of most of my early posts on LW [which were crossposts from my blog], so maybe it's not a [...] --- First published: June 11th, 2025 Source: https://www.lesswrong.com/posts/3EzbtNLdcnZe8og8b/the-void-1 Linkpost URL: https://nostalgebraist.tumblr.com/post/785766737747574784/the-void --- Narrated by TYPE III AUDIO.

This is a blogpost version of a talk I gave earlier this year at GDM. Epistemic status: Vague and handwavy. Nuance is often missing. Some of the claims depend on implicit definitions that may be reasonable to disagree with. But overall I think it's directionally true. It's often said that mech interp is pre-paradigmatic. I think it's worth being skeptical of this claim. In this post I argue that: Mech interp is not pre-paradigmatic. Within that paradigm, there have been "waves" (mini paradigms). Two waves so far. Second-Wave Mech Interp has recently entered a 'crisis' phase. We may be on the edge of a third wave. Preamble: Kuhn, paradigms, and paradigm shifts First, we need to be familiar with the basic definition of a paradigm: A paradigm is a distinct set of concepts or thought patterns, including theories, research [...] --- Outline: (00:58) Preamble: Kuhn, paradigms, and paradigm shifts (03:56) Claim: Mech Interp is Not Pre-paradigmatic (07:56) First-Wave Mech Interp (ca. 2012 - 2021) (10:21) The Crisis in First-Wave Mech Interp (11:21) Second-Wave Mech Interp (ca. 2022 - ??) (14:23) Anomalies in Second-Wave Mech Interp (17:10) The Crisis of Second-Wave Mech Interp (ca. 2025 - ??) (18:25) Toward Third-Wave Mechanistic Interpretability (20:28) The Basics of Parameter Decomposition (22:40) Parameter Decomposition Questions Foundational Assumptions of Second-Wave Mech Interp (24:13) Parameter Decomposition In Theory Resolves Anomalies of Second-Wave Mech Interp (27:27) Conclusion The original text contained 6 footnotes which were omitted from this narration. --- First published: June 10th, 2025 Source: https://www.lesswrong.com/posts/beREnXhBnzxbJtr8k/mech-interp-is-not-pre-paradigmatic --- Narrated by TYPE III AUDIO.

“Distillation Robustifies Unlearning” by Bruce W. Lee, Addie Foote, alexinf, leni, Jacob G-W, Harish Kamath, Bryce Woodworth, cloud, TurnTrout 17:19

Current “unlearning” methods only suppress capabilities instead of truly unlearning the capabilities. But if you distill an unlearned model into a randomly initialized model, the resulting network is actually robust to relearning. We show why this works, how well it works, and how to trade off compute for robustness. Unlearn-and-Distill applies unlearning to a bad behavior and then distills the unlearned model into a new model. Distillation makes it way harder to retrain the new model to do the bad thing. Produced as part of the ML Alignment & Theory Scholars Program in the winter 2024–25 cohort of the shard theory stream. Read our paper on ArXiv and enjoy an interactive demo. Robust unlearning probably reduces AI risk Maybe some future AI has long-term goals and humanity is in its way. Maybe future open-weight AIs have tons of bioterror expertise. If a system has dangerous knowledge, that system becomes [...] --- Outline: (01:01) Robust unlearning probably reduces AI risk (02:42) Perfect data filtering is the current unlearning gold standard (03:24) Oracle matching does not guarantee robust unlearning (05:05) Distillation robustifies unlearning (07:46) Trading unlearning robustness for compute (09:49) UNDO is better than other unlearning methods (11:19) Where this leaves us (11:22) Limitations (12:12) Insights and speculation (15:00) Future directions (15:35) Conclusion (16:07) Acknowledgments (16:50) Citation The original text contained 2 footnotes which were omitted from this narration. --- First published: June 13th, 2025 Source: https://www.lesswrong.com/posts/anX4QrNjhJqGFvrBr/distillation-robustifies-unlearning --- Narrated by TYPE III AUDIO.
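
The summary does not say which objective the authors use for the distillation step, so the sketch below assumes the most common one: the freshly initialized student is trained to match the unlearned teacher's output distribution by minimizing KL(teacher || student). The function names and the example logits are hypothetical, and a real run would average this loss over many inputs and backpropagate through the student.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits):
    """KL(teacher || student) for a single input.

    Assumed objective: the student only ever sees the teacher's outputs, so it
    copies the teacher's behaviour without inheriting the teacher's weights.
    """
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 0.5, -1.0]        # outputs of the unlearned model (made-up numbers)
untrained_student = [0.0, 0.0, 0.0]
matched_student = [2.0, 0.5, -1.0]

print(distillation_loss(teacher, untrained_student) > 0)
print(abs(distillation_loss(teacher, matched_student)) < 1e-12)
```

The design point the description makes is that the student starts from random weights: whatever suppressed capability still sits latent in the teacher's parameters has no direct route into the student, which only learns what the teacher's outputs actually express.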

A while ago I saw a person in the comments on Scott Alexander's blog arguing that a superintelligent AI would not be able to do anything too weird and that "intelligence is not magic", hence it's Business As Usual. Of course, in a purely technical sense, he's right. No matter how intelligent you are, you cannot override fundamental laws of physics. But people (myself included) have a fairly low threshold for what counts as "magic," to the point where other humans can surpass that threshold. Example 1: Trevor Rainbolt. There is an 8-minute-long video where he does seemingly impossible things, such as correctly guessing that a photo of nothing but literal blue sky was taken in Indonesia or guessing Jordan based only on pavement. He can also correctly identify the country after looking at a photo for 0.1 seconds. Example 2: Joaquín "El Chapo" Guzmán. He ran [...] --- First published: June 15th, 2025 Source: https://www.lesswrong.com/posts/FBvWM5HgSWwJa5xHc/intelligence-is-not-magic-but-your-threshold-for-magic-is --- Narrated by TYPE III AUDIO.

Audio note: this article contains 329 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description. This post was written during the agent foundations fellowship with Alex Altair funded by the LTFF. Thanks to Alex, Jose, Daniel and Einar for reading and commenting on a draft. The Good Regulator Theorem, as published by Conant and Ashby in their 1970 paper (cited over 1700 times!) claims to show that 'every good regulator of a system must be a model of that system', though it is a subject of debate as to whether this is actually what the paper shows. It is a fairly simple mathematical result which is worth knowing about for people who care about agent foundations and selection theorems. You might have heard about the Good Regulator Theorem in the context of John [...] --- Outline: (03:03) The Setup (07:30) What makes a regulator good? (10:36) The Theorem Statement (11:24) Concavity of Entropy (15:42) The Main Lemma (19:54) The Theorem (22:38) Example (26:59) Conclusion --- First published: November 18th, 2024 Source: https://www.lesswrong.com/posts/JQefBJDHG6Wgffw6T/a-straightforward-explanation-of-the-good-regulator-theorem --- Narrated by TYPE III AUDIO.

“Beware General Claims about ‘Generalizable Reasoning Capabilities’ (of Modern AI Systems)” by LawrenceC 34:11

1. Late last week, researchers at Apple released a paper provocatively titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, which “challenge[s] prevailing assumptions about [language model] capabilities and suggest that current approaches may be encountering fundamental barriers to generalizable reasoning”. Normally I refrain from publicly commenting on newly released papers. But then I saw the following tweet from Gary Marcus: I have always wanted to engage thoughtfully with Gary Marcus. In a past life (as a psychology undergrad), I read both his work on infant language acquisition and his 2001 book The Algebraic Mind; I found both insightful and interesting. From reading his Twitter, Gary Marcus is thoughtful and willing to call it like he sees it. If he's right about language models hitting fundamental barriers, it's worth understanding why; if not, it's worth explaining where his analysis [...] --- Outline: (00:13) 1. (02:13) 2. (03:12) 3. (08:42) 4. (11:53) 5. (15:15) 6. (18:50) 7. (20:33) 8. (23:14) 9. (28:15) 10. (33:40) Acknowledgements The original text contained 7 footnotes which were omitted from this narration. --- First published: June 11th, 2025 Source: https://www.lesswrong.com/posts/5uw26uDdFbFQgKzih/beware-general-claims-about-generalizable-reasoning --- Narrated by TYPE III AUDIO.

Four agents woke up with four computers, a view of the world wide web, and a shared chat room full of humans. Like Claude plays Pokemon, you can watch these agents figure out a new and fantastic world for the first time. Except in this case, the world they are figuring out is our world. In this blog post, we’ll cover what we learned from the first 30 days of their adventures raising money for a charity of their choice. We’ll briefly review how the Agent Village came to be, then what the various agents achieved, before discussing some general patterns we have discovered in their behavior, and looking toward the future of the project. Building the Village The Agent Village is an idea by Daniel Kokotajlo where he proposed giving 100 agents their own computer, and letting each pursue their own goal, in their own way, according to [...] --- Outline: (00:50) Building the Village (02:26) Meet the Agents (08:52) Collective Agent Behavior (12:26) Future of the Village --- First published: May 27th, 2025 Source: https://www.lesswrong.com/posts/jyrcdykz6qPTpw7FX/season-recap-of-the-village-agents-raise-usd2-000 --- Narrated by TYPE III AUDIO.

Introduction The Best Textbooks on Every Subject is the Schelling point for the best textbooks on every subject. My The Best Tacit Knowledge Videos on Every Subject is the Schelling point for the best tacit knowledge videos on every subject. This post is the Schelling point for the best reference works for every subject. Reference works provide an overview of a subject. Types of reference works include charts, maps, encyclopedias, glossaries, wikis, classification systems, taxonomies, syllabi, and bibliographies. Reference works are valuable for orienting oneself to fields, particularly when beginning. They can help identify unknown unknowns; they help get a sense of the bigger picture; they are also very interesting and fun to explore. How to Submit My previous The Best Tacit Knowledge Videos on Every Subject uses author credentials to assess the epistemics of submissions. The Best Textbooks on Every Subject requires submissions to be from someone who [...] --- Outline: (00:10) Introduction (01:00) How to Submit (02:15) The List (02:18) Humanities (02:21) History (03:46) Religion (04:02) Philosophy (04:29) Literature (04:43) Formal Sciences (04:47) Computer Science (05:16) Mathematics (05:59) Natural Sciences (06:02) Physics (06:16) Earth Science (06:33) Astronomy (06:47) Professional and Applied Sciences (06:51) Library and Information Sciences (07:34) Education (08:00) Research (08:32) Finance (08:51) Medicine and Health (09:21) Meditation (09:52) Urban Planning (10:24) Social Sciences (10:27) Economics (10:39) Political Science (10:54) By Medium (11:21) Other Lists like This (12:41) Further Reading --- First published: May 14th, 2025 Source: https://www.lesswrong.com/posts/HLJMyd4ncE3kvjwhe/the-best-reference-works-for-every-subject --- Narrated by TYPE III AUDIO .…

Has someone you know ever had a “breakthrough” from coaching, meditation, or psychedelics, only to later have it fade? For example, many people experience ego deaths that can last days or sometimes months. But as it turns out, having a sense of self can serve important functions (try navigating a world that expects you to have opinions, goals, and boundaries when you genuinely feel you have none) and finding a better cognitive strategy without downsides is non-trivial. Because the “breakthrough” wasn’t integrated with the conflicts of everyday life, it fades. I call these instances “flaky breakthroughs.” It's well-known that flaky breakthroughs are common with psychedelics and meditation, but apparently it's not well-known that flaky breakthroughs are pervasive in coaching and retreats. For example, it is common for someone to do some coaching, feel a “breakthrough”, think, “Wow, everything is going to be different from [...] --- Outline: (03:01) Almost no practitioners track whether breakthroughs last. (04:55) What happens during flaky breakthroughs? (08:02) Reduce flaky breakthroughs with accountability (08:30) Flaky breakthroughs don't mean rapid growth is impossible (08:55) Conclusion --- First published: June 4th, 2025 Source: https://www.lesswrong.com/posts/bqPY63oKb8KZ4x4YX/flaky-breakthroughs-pervade-coaching-and-no-one-tracks-them --- Narrated by TYPE III AUDIO.

What's the main value proposition of romantic relationships? Now, look, I know that when people drop that kind of question, they’re often about to present a hyper-cynical answer which totally ignores the main thing which is great and beautiful about relationships. And then they’re going to say something about how relationships are overrated or some such, making you as a reader just feel sad and/or enraged. That's not what this post is about. So let me start with some more constructive motivations… First Motivation: Noticing When The Thing Is Missing I had a 10-year relationship. It had its ups and downs, but it was overall negative for me. And I now think a big part of the problem with that relationship was that it did not have the part which contributes most of the value in most relationships. But I did not know that at the time. Recently, I [...] --- Outline: (00:40) First Motivation: Noticing When The Thing Is Missing (01:29) Second Motivation: Selecting For and Cultivating The Thing (02:25) Some Pointers To The Thing (03:17) How To Manufacture Relationships In The Lab (04:53) Ace Aro Relationships (08:04) Some Pointers To Willingness to Be Vulnerable (12:33) Unfolding The Thing (13:11) Play (15:18) Emotional Support (16:21) A Tiny High-Trust Community (18:18) Communication (21:28) The Obvious Caveat (22:20) Summary The original text contained 2 footnotes which were omitted from this narration. --- First published: June 2nd, 2025 Source: https://www.lesswrong.com/posts/L2GR6TsB9QDqMhWs7/the-value-proposition-of-romantic-relationships --- Narrated by TYPE III AUDIO .…

Abstract Claude 3.7 Sonnet easily detects when it's being evaluated for scheming. Surface‑level edits to evaluation scenarios, such as lengthening the prompts or making the conflict of objectives less salient, do improve the realism of evaluation scenarios for LLMs, yet these improvements remain modest. The findings confirm that truly disguising an evaluation context demands removal of deep stylistic and structural cues rather than superficial prompt adjustments. For future LLMs the situation is likely to get worse, as they are likely to get better at evaluation awareness, which might become a big problem for detecting scheming via evaluations. Apollo Research published a benchmark for scheming behavior for LLM agents, in which LLMs are given some objective in their system prompt, and then given a competing objective in a user prompt, combined with a threat of punishment if the LLM won't satisfy the user's goal (e.g. it could be finetuned or [...] --- Outline: (02:29) Our pipeline (05:20) Strategies to improve realism of scenarios (07:15) Line graph showing example of grading a rewritten scenario with declining probabilities. --- First published: May 24th, 2025 Source: https://www.lesswrong.com/posts/TBk2dbWkg2F7dB3jb/it-s-hard-to-make-scheming-evals-look-realistic --- Narrated by TYPE III AUDIO.

This is a link post. There's this popular idea that socially anxious folks are just dying to be liked. It seems logical, right? Why else would someone be so anxious about how others see them? And yet, being socially anxious tends to make you less likeable… they must be optimizing poorly, behaving irrationally, right? Maybe not. What if social anxiety isn’t about getting people to like you? What if it's about stopping them from disliking you? Consider what can happen when someone has social anxiety (or self-loathing, self-doubt, insecurity, lack of confidence, etc.): they stoop or take up less space; they become less agentic; they make fewer requests of others; they maintain fewer relationships, go out less, take fewer risks… If they were trying to get people to like them, becoming socially anxious would be an incredibly bad strategy. So what if they're not concerned with being likeable? [...] --- Outline: (01:18) What if what they actually want is to avoid being disliked? (02:11) Social anxiety is a symptom of risk aversion (03:46) What does this mean for your growth? --- First published: May 16th, 2025 Source: https://www.lesswrong.com/posts/wFC44bs2CZJDnF5gy/social-anxiety-isn-t-about-being-liked Linkpost URL: https://chrislakin.blog/social-anxiety --- Narrated by TYPE III AUDIO.

1 “Truth or Dare” by Duncan Sabien (Inactive) 2:03:21

Author's note: This is my apparently-annual "I'll put a post on LessWrong in honor of LessOnline" post. These days, my writing goes on my Substack. There have in fact been some pretty cool essays since last year's LO post. Structural note: Some essays are like a five-minute morning news spot. Other essays are more like a 90-minute lecture. This is one of the latter. It's not necessarily complex or difficult; it could be a 90-minute lecture to seventh graders (especially ones with the right cultural background). But this is, inescapably, a long-form piece, à la In Defense of Punch Bug or The MTG Color Wheel. It takes its time. It doesn’t apologize for its meandering (outside of this disclaimer). It asks you to sink deeply into a gestalt, to drift back and forth between seemingly unrelated concepts until you start to feel the way those concepts weave together [...] --- Outline: (02:30) 0. Introduction (10:08) A list of truths and dares (14:34) Act I (14:37) Scene I: How The Water Tastes To The Fishes (22:38) Scene II: The Chip on Mitchell's Shoulder (28:17) Act II (28:20) Scene I: Bent Out Of Shape (41:26) Scene II: Going Stag, But Like ... Together? (48:31) Scene III: Patterns, Projections, and Preconceptions (01:02:04) Interlude: The Sound of One Hand Clapping (01:05:45) Act III (01:05:56) Scene I: Memetic Traps (Or, The Battle for the Soul of Morty Smith) (01:27:16) Scene II: The problem with Rhonda Byrne's 2006 bestseller The Secret (01:32:39) Scene III: Escape velocity (01:42:26) Act IV (01:42:29) Scene I: Boy, putting Zack Davis's name in a header will probably have Effects, huh (01:44:08) Scene II: Whence Wholesomeness? --- First published: May 29th, 2025 Source: https://www.lesswrong.com/posts/TQ4AXj3bCMfrNPTLf/truth-or-dare --- Narrated by TYPE III AUDIO.

Lessons from shutting down institutions in Eastern Europe. This is a cross post from: https://250bpm.substack.com/p/meditations-on-doge Imagine living in the former Soviet republic of Georgia in the early 2000s: All marshrutka [mini taxi bus] drivers had to have a medical exam every day to make sure they were not drunk and did not have high blood pressure. If a driver did not display his health certificate, he risked losing his license. By the time Shevardnadze was in power there were hundreds, probably thousands, of marshrutkas ferrying people all over the capital city of Tbilisi. Shevardnadze's government was detail-oriented not only when it came to taxi drivers. It decided that all the stalls of petty street-side traders had to conform to a particular architectural design. Like marshrutka drivers, such traders had to renew their licenses twice a year. These regulations were only the tip of the iceberg. Gas [...] --- First published: May 25th, 2025 Source: https://www.lesswrong.com/posts/Zhp2Xe8cWqDcf2rsY/meditations-on-doge --- Narrated by TYPE III AUDIO.

This is a link post. "Getting Things in Order: An Introduction to the R Package seriation": Seriation [or "ordination"], i.e., finding a suitable linear order for a set of objects given data and a loss or merit function, is a basic problem in data analysis. Caused by the problem's combinatorial nature, it is hard to solve for all but very small sets. Nevertheless, both exact solution methods and heuristics are available. In this paper we present the package seriation which provides an infrastructure for seriation with R. The infrastructure comprises data structures to represent linear orders as permutation vectors, a wide array of seriation methods using a consistent interface, a method to calculate the value of various loss and merit functions, and several visualization techniques which build on seriation. To illustrate how easily the package can be applied for a variety of applications, a comprehensive collection of [...] --- First published: May 28th, 2025 Source: https://www.lesswrong.com/posts/u2ww8yKp9xAB6qzcr/if-you-re-not-sure-how-to-sort-a-list-or-grid-seriate-it Linkpost URL: https://www.jstatsoft.org/article/download/v025i03/227 --- Narrated by TYPE III AUDIO.
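
To make the seriation idea above concrete, here is a minimal Python sketch. It is not the R package's API: the function names (path_length, seriate_exact, seriate_greedy), the choice of loss (sum of dissimilarities between neighbours in the order), and the sample data are all assumptions made for illustration. It contrasts an exact search over every permutation, which is only feasible for very small sets, with a simple nearest-neighbour heuristic.

```python
from itertools import permutations

def path_length(order, dist):
    """Loss (assumed for illustration): sum of dissimilarities between
    neighbouring objects in the linear order."""
    return sum(dist[a][b] for a, b in zip(order, order[1:]))

def seriate_exact(dist):
    """Exact method: evaluate all n! orders, so only usable for tiny n."""
    n = len(dist)
    return min(permutations(range(n)), key=lambda order: path_length(order, dist))

def seriate_greedy(dist, start=0):
    """Heuristic: repeatedly append the nearest object not yet placed."""
    order = [start]
    remaining = set(range(len(dist))) - {start}
    while remaining:
        nxt = min(remaining, key=lambda j: dist[order[-1]][j])
        order.append(nxt)
        remaining.remove(nxt)
    return tuple(order)

# Hypothetical data: five points on a line, given in shuffled order.
points = [5.0, 1.0, 3.0, 9.0, 7.0]
dist = [[abs(p - q) for q in points] for p in points]

best = seriate_exact(dist)          # permutation vector with minimal loss
greedy = seriate_greedy(dist)       # cheaper, not guaranteed optimal
print([points[i] for i in best], path_length(best, dist))
print([points[i] for i in greedy], path_length(greedy, dist))
```

The exact search recovers a monotone ordering of the points, while the greedy order can be worse because it commits to a starting object. With more than roughly ten objects the factorial search becomes impractical, which is where heuristics come in.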

Between late 2024 and mid-May 2025, I briefed over 70 cross-party UK parliamentarians. Just over one-third were MPs, a similar share were members of the House of Lords, and just under one-third came from devolved legislatures — the Scottish Parliament, the Senedd, and the Northern Ireland Assembly. I also held eight additional meetings attended exclusively by parliamentary staffers. While I delivered some briefings alone, most were led by two members of our team. I did this as part of my work as a Policy Advisor with ControlAI, where we aim to build common knowledge of AI risks through clear, honest, and direct engagement with parliamentarians about both the challenges and potential solutions. To succeed at scale in managing AI risk, it is important to continue to build this common knowledge. For this reason, I have decided to share what I have learned over the past few months publicly, in [...] --- Outline: (01:37) (i) Overall reception of our briefings (04:21) (ii) Outreach tips (05:45) (iii) Key talking points (14:20) (iv) Crafting a good pitch (19:23) (v) Some challenges (23:07) (vi) General tips (28:57) (vii) Books & media articles --- First published: May 27th, 2025 Source: https://www.lesswrong.com/posts/Xwrajm92fdjd7cqnN/what-we-learned-from-briefing-70-lawmakers-on-the-threat --- Narrated by TYPE III AUDIO .…

Have the Accelerationists won? Last November Kevin Roose announced that those in favor of going fast on AI had now won against those favoring caution, with the reinstatement of Sam Altman at OpenAI. Let's ignore whether Kevin's was a good description of the world, and deal with a more basic question: if it were so—i.e. if Team Acceleration would control the acceleration from here on out—what kind of win was it they won? It seems to me that they would have probably won in the same sense that your dog has won if she escapes onto the road. She won the power contest with you and is probably feeling good at this moment, but if she does actually like being alive, and just has different ideas about how safe the road is, or wasn’t focused on anything so abstract as that, then whether she ultimately wins or [...] --- First published: May 20th, 2025 Source: https://www.lesswrong.com/posts/h45ngW5guruD7tS4b/winning-the-power-to-lose --- Narrated by TYPE III AUDIO .…

This is a link post. Google DeepMind has announced Gemini Diffusion. Though buried under a host of other I/O announcements, it's possible that this is actually the most important one! This is significant because diffusion models are entirely different to LLMs. Instead of predicting the next token, they iteratively denoise all the output tokens until they produce a coherent result. This is similar to how image diffusion models work. I've tried the results and they are surprisingly good! It's incredibly fast, averaging nearly 1000 tokens a second. And it one-shotted my Google interview question, giving a perfect response in 2 seconds (though it struggled a bit on the followups). It's nowhere near as good as Gemini 2.5 Pro, but it knocks ChatGPT 3 out of the water. If we'd seen this 3 years ago we'd have been mind blown. Now this is wild for two reasons: We now have [...] --- First published: May 20th, 2025 Source: https://www.lesswrong.com/posts/MZvtRqWnwokTub9sH/gemini-diffusion-watch-this-space Linkpost URL: https://deepmind.google/models/gemini-diffusion/ --- Narrated by TYPE III AUDIO.