“METR’s Evaluation of GPT-5” by GradientDissenter

Manage episode 499111884 series 3364758

محتوای ارائه شده توسط LessWrong. تمام محتوای پادکست شامل قسمتها، گرافیکها و توضیحات پادکست مستقیماً توسط LessWrong یا شریک پلتفرم پادکست آنها آپلود و ارائه میشوند. اگر فکر میکنید شخصی بدون اجازه شما از اثر دارای حق نسخهبرداری شما استفاده میکند، میتوانید روندی که در اینجا شرح داده شده است را دنبال کنید.https://fa.player.fm/legal

METR (where I work, though I'm cross-posting in a personal capacity) evaluated GPT-5 before it was externally deployed. We performed a much more comprehensive safety analysis than we ever have before; it feels like pre-deployment evals are getting more mature.

This is the first time METR has produced something we've felt comfortable calling an "evaluation" instead of a "preliminary evaluation". It's much more thorough and comprehensive than the things we've created before and it explores three different threat models.

It's one of the closest things out there to a real-world autonomy safety-case. It also provides a rough sense of how long it'll be before current evaluations no longer provide safety assurances.

I've ported the blogpost over to LW in case people want to read it.

Details about METR's evaluation of OpenAI GPT-5

Note on independence: This evaluation was conducted under a standard NDA. Due to the sensitive information [...]

---

Outline:

(00:58) Details about METR's evaluation of OpenAI GPT-5

(01:23) Executive Summary

(07:08) Assurance Checklist Summary

(07:42) What capabilities may be necessary to cause catastrophic risks via these threat models?

(10:43) Thresholds for concern

(12:48) Time horizon measurement

(16:30) 1. What if GPT-5's capabilities are higher than what our task suite can properly measure?

(19:23) 2. What if our treatment of reward hacking runs is unfair to GPT-5?

(21:45) 3. What if we set GPT-5's token budget too low?

(24:26) 4. What if our task suite significantly underestimates the 'real-world' capabilities of GPT-5?

(25:59) Strategic Sabotage

(30:54) GPT-5's capability profile is similar to past models

(31:30) No real strategic sabotage was identified by our monitor

(32:16) Manual inspection of reasoning traces did not reveal strategic sabotage

(33:04) GPT-5's estimates of its own time horizon are inaccurate

(33:53) We do find evidence of significant situational awareness, though it is not robust and often gets things wrong

(35:41) GPT-5's behavior changes depending on what evaluation it 'believes' it is in, and this is often reflected in its reasoning traces

(37:01) GPT-5's reasoning traces were occasionally inscrutable

(38:08) Limitations and future work

(41:57) Appendix

(42:00) METR's access to GPT-5

(43:38) Honeypot Results Table

(44:42) Example Behavior in task attempts

(44:47) Example limitation: inappropriate levels of caution

(46:19) Example capability: puzzle solving

The original text contained 10 footnotes which were omitted from this narration.

---

First published:

August 7th, 2025

Source:

https://www.lesswrong.com/posts/SuvWoLaGiNjPDcA7d/metr-s-evaluation-of-gpt-5

---

Narrated by TYPE III AUDIO.

---

…

continue reading

This is the first time METR has produced something we've felt comfortable calling an "evaluation" instead of a "preliminary evaluation". It's much more thorough and comprehensive than the things we've created before and it explores three different threat models.

It's one of the closest things out there to a real-world autonomy safety-case. It also provides a rough sense of how long it'll be before current evaluations no longer provide safety assurances.

I've ported the blogpost over to LW in case people want to read it.

Details about METR's evaluation of OpenAI GPT-5

Note on independence: This evaluation was conducted under a standard NDA. Due to the sensitive information [...]

---

Outline:

(00:58) Details about METR's evaluation of OpenAI GPT-5

(01:23) Executive Summary

(07:08) Assurance Checklist Summary

(07:42) What capabilities may be necessary to cause catastrophic risks via these threat models?

(10:43) Thresholds for concern

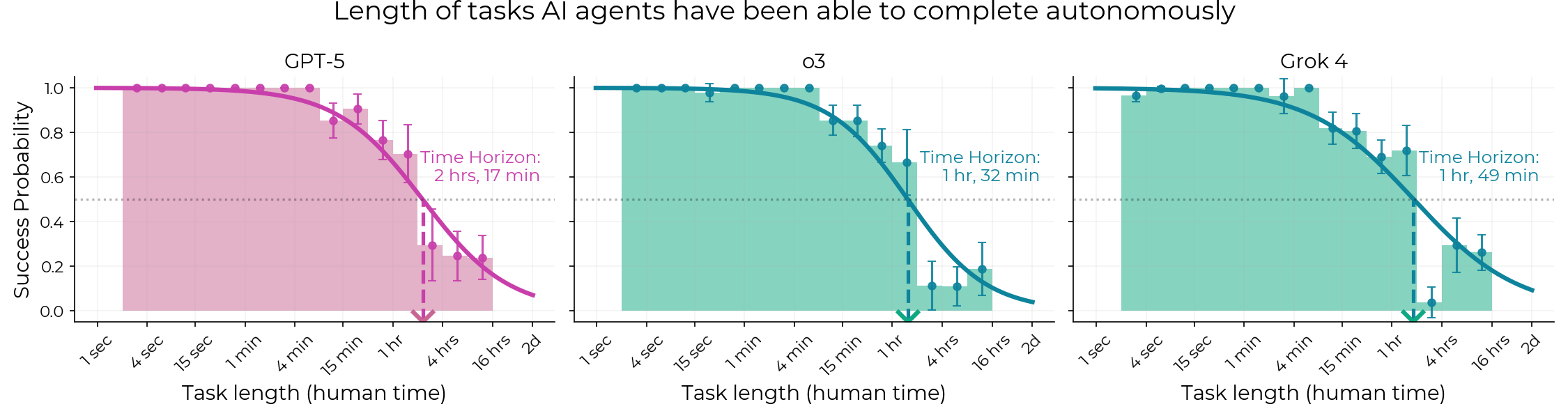

(12:48) Time horizon measurement

(16:30) 1. What if GPT-5's capabilities are higher than what our task suite can properly measure?

(19:23) 2. What if our treatment of reward hacking runs is unfair to GPT-5?

(21:45) 3. What if we set GPT-5's token budget too low?

(24:26) 4. What if our task suite significantly underestimates the 'real-world' capabilities of GPT-5?

(25:59) Strategic Sabotage

(30:54) GPT-5's capability profile is similar to past models

(31:30) No real strategic sabotage was identified by our monitor

(32:16) Manual inspection of reasoning traces did not reveal strategic sabotage

(33:04) GPT-5's estimates of its own time horizon are inaccurate

(33:53) We do find evidence of significant situational awareness, though it is not robust and often gets things wrong

(35:41) GPT-5's behavior changes depending on what evaluation it 'believes' it is in, and this is often reflected in its reasoning traces

(37:01) GPT-5's reasoning traces were occasionally inscrutable

(38:08) Limitations and future work

(41:57) Appendix

(42:00) METR's access to GPT-5

(43:38) Honeypot Results Table

(44:42) Example Behavior in task attempts

(44:47) Example limitation: inappropriate levels of caution

(46:19) Example capability: puzzle solving

The original text contained 10 footnotes which were omitted from this narration.

---

First published:

August 7th, 2025

Source:

https://www.lesswrong.com/posts/SuvWoLaGiNjPDcA7d/metr-s-evaluation-of-gpt-5

---

Narrated by TYPE III AUDIO.

---

602 قسمت